DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

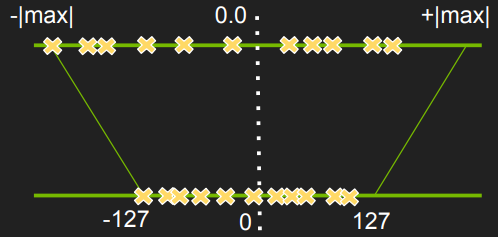

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re
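
The kind of model the report describes can be sketched as follows. This is a single-process illustration only: it does not wrap the model in DistributedDataParallel and does not reproduce the multi-GPU failure. The class name `ModelWithIntParam` and the `index` parameter are hypothetical, chosen for illustration. The sketch shows that an integer parameter is accepted when `requires_grad=False`, and that asking for gradients on the same tensor raises a `RuntimeError`.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical model: one float layer plus one integer parameter."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # A non-floating-point parameter must be created with requires_grad=False.
        self.index = nn.Parameter(
            torch.arange(4, dtype=torch.long), requires_grad=False
        )

    def forward(self, x):
        # Use the integer parameter to select input columns.
        return self.linear(x[:, self.index])


model = ModelWithIntParam()

# List the parameters whose dtype is not floating point.
non_float = [
    name for name, p in model.named_parameters() if not p.is_floating_point()
]

out = model(torch.randn(3, 4))

# Requesting gradients on an integer tensor is rejected at construction time.
try:
    nn.Parameter(torch.arange(4, dtype=torch.long), requires_grad=True)
    raised = False
except RuntimeError:
    raised = True
```

A check like the `non_float` list above is one way to find which parameters of a model fall into the category the issue describes before wrapping it in DistributedDataParallel.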

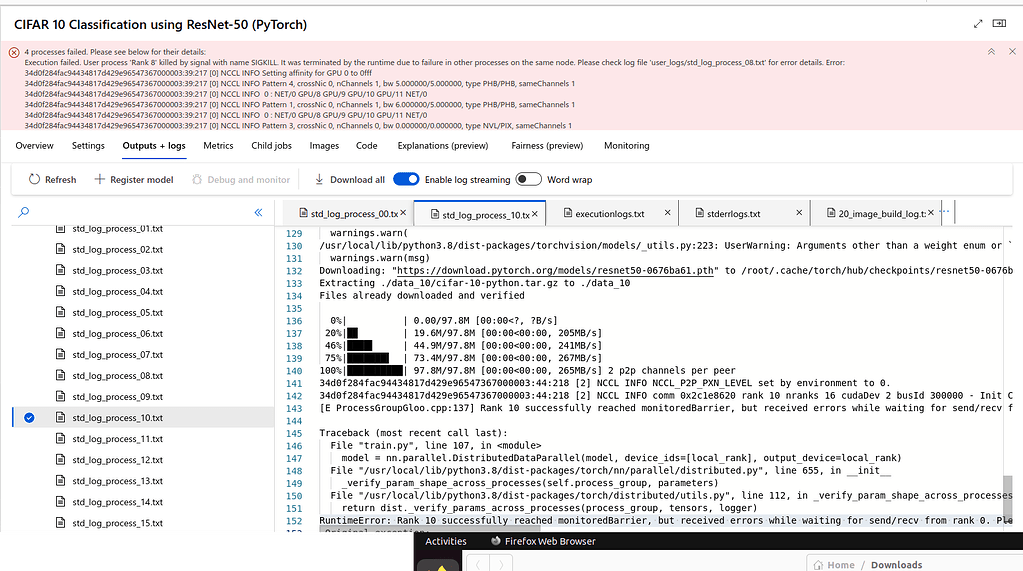

PyTorch DDP -- RuntimeError: Rank 10 successfully reached

Question/Possible bug with ddp/DistributedDataParallel accessing a

torch.distributed.barrier Bug with pytorch 2.0 and Backend=NCCL

Don't understand why only Tensors of floating point dtype can

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

Cannot convert a MPS Tensor to float64 dtype as the MPS framework

Inplace error if DistributedDataParallel module that contains a

Distributed Data Parallel and Its Pytorch Example

Issue for DataParallel · Issue #8637 · pytorch/pytorch · GitHub

Rethinking PyTorch Fully Sharded Data Parallel (FSDP) from First

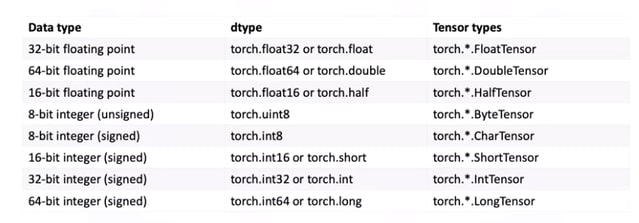

Introduction to Tensors in Pytorch #1

pytorch/torch/nn/parallel/distributed.py at main · pytorch/pytorch

Increase YOLOv4 object detection speed on GPU with TensorRT